While at ARC, Fisher had met Thomas G. Zimmerman, the developer of a new kind of glove that could be used to measure the degree of bend or flex in each finger joint. Zimmerman had originally developed the glove with the intent of using it as an instrument to create music. By hooking it up to a computer that controlled a music synthesizer, wearers could play invisible instruments simply by moving their fingers. It was designed to be the ultimate “air guitar”!

Because it accurately tracked finger movements, Fisher realized that it could be used as an input device to create a virtual hand that would appear in the computer-generated worlds being developed at NASA. If you were wearing the glove and the NASA helmet and looked at your hand, you would see a caricature of it generated by the computer. If you wiggled your fingers, the image would wiggle its fingers. For the first time, a representation of a person’s physical body would become part of the simulation.

In 1983, Zimmerman teamed up with Jaron Lanier (who had also recently left Atari) to wed the glove technology to Lanier’s ideas of a virtual programming interface for non-programmers. This collaboration evolved into a company, VPL Research, which was officially founded in 1985. They called their first product the DataGlove. That very same year, Fisher ordered one of the unique gloves for his work at NASA.

By the time the glove arrived in 1986, NASA’s VIVED group had grown to include Warren Robinett (an Atari video-game programmer and creator of Rocky’s Boots and the popular Atari Adventure game) and later Douglas Kerr, another programmer. Robinett used his programming skills to create dramatic demonstrations of the versatility of this new visualization tool.

He was, however, somewhat constrained by the less-than-state-of-the-art equipment McGreevy had assembled on his shoestring budget. Just as Ivan Sutherland was limited to rendering worlds in glowing green vectors back in 1968, Robinett was limited to stick-figure and wire-frame representations of real-world objects. Making the most of the available tools, Robinett created simulations of architectural structures, haemoglobin molecules, the space shuttle and turbulent flow patterns.

By the end of 1986, the NASA team had assembled a virtual environment that allowed users to issue voice commands, hear synthesized speech and 3-D sound sources, and manipulate virtual objects directly by grasping them with their hands. No longer was the computer a separate thing you sat in front of and stared at; you were now completely inside it. You communicated with it by talking and gesturing instead of typing, and swearing whenever you made a mistake and had to start at the beginning again.

Probably the most important thing NASA achieved was demonstrating that all this was possible by assembling an assortment of commercially available technologies that didn’t cost a fortune to acquire or develop. Pandora’s box had been sitting around for a while and others had peeked into it, but it was NASA who threw it wide open.

Cybernauts venturing into NASA’s virtual worlds had to outfit themselves with a collection of gear that a scuba diver might recognize, particularly because the original design used a scuba-mask frame to mount the LCD displays. Instead of a glass window into the undersea world, the displays were glass windows into the virtual world. The goal of the NASA design was to completely isolate the user from the outside world. Thomas Furness III had pioneered this same approach with his Darth Vader helmet (see Part 5). NASA also added headphones that further contributed to the isolation. Reality no longer intruded. The virtual world commanded all the user’s attention.

The cybernaut’s lifeline was a series of cables that led from the headgear and DataGlove to an array of computers and control boxes. Just as early divers used compressor pumps and air hoses to provide access to their new world, virtual explorers were similarly connected to their reality-generating machines. In their exploration of these new virtual environments, cybernauts were like divers descending alone into the undersea realm.

Sticking your head into one of these early NASA HMDs, you would first notice the grainy, low-resolution image. It was like looking through a magnifying glass at a TV screen; you could see how the image was composed of individual points or picture elements. This is known as the “screen door effect”, and it was still an issue in the era of Oculus headsets. Because of it, the image quality was a great deal worse than anything usually seen on a computer monitor.

Normally, you don’t notice that images on your TV or computer screen are composed of tiny dots. With the NASA display, it was glaringly obvious. As Myron Krueger has so aptly pointed out, the image quality was so poor that you would be declared legally blind in most states if you had similar real-world vision.

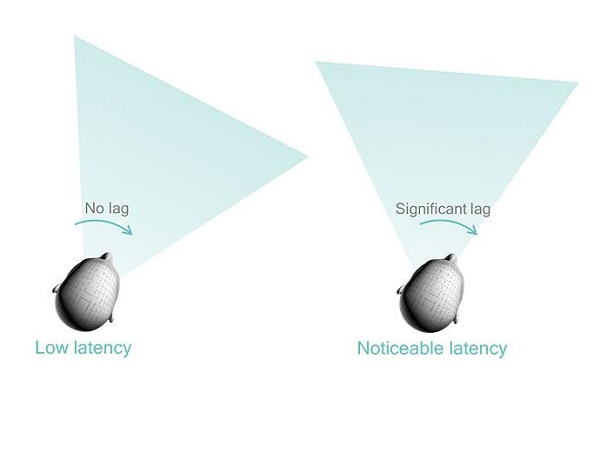

After adjusting to the low resolution, the next thing you would notice was that, when you moved your head, the stick-figure representation of the world moved too. A sensor attached to the top of the HMD registered the position and orientation of your head. As you moved, the computer would query the sensor (every 60th of a second) and redraw the image.

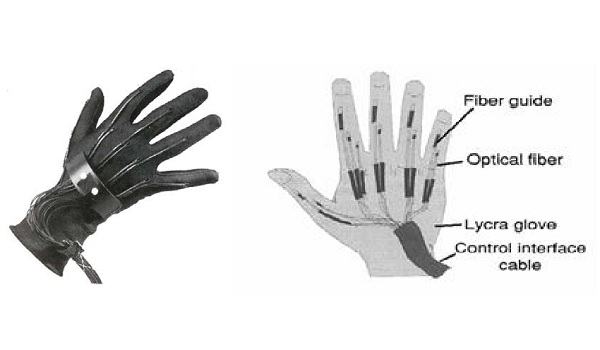

You would also notice that the image noticeably lagged behind your head movements (a delay often called “motion-to-photon” latency). If you quickly moved your head, it would take about one-fifth of a second, or 200 milliseconds, for the computer to catch up. You soon learned to make slower head movements. It’s not fun being out of sync with reality.

Holding up your gloved hand in front of you, you would see a simple, blocky, wireframe representation of a hand. You could turn it over and move your fingers, and the disembodied hand would mimic the motion. Using fibre-optic sensors, the computer knew exactly where your hand was and what movements your fingers made.
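To put the lag figures quoted above in perspective, here is a small back-of-the-envelope sketch in Python. The 60 Hz polling rate and the 200 millisecond delay come from the text; the head-rotation speeds are illustrative assumptions, not measurements from the NASA system, and the function names are made up for this example.

```python
# Figures taken from the text.
POLL_HZ = 60      # the sensor was queried every 60th of a second
LAG_S = 0.200     # the image trailed head movement by about 200 ms


def frames_behind(lag_s: float, poll_hz: float) -> int:
    """Number of sensor polls that elapse during the lag."""
    return round(lag_s * poll_hz)


def angular_error_deg(head_speed_deg_per_s: float, lag_s: float) -> float:
    """How far the displayed view trails the real head direction,
    assuming the head turns at a constant speed."""
    return head_speed_deg_per_s * lag_s


if __name__ == "__main__":
    print("polls elapsed during the lag:", frames_behind(LAG_S, POLL_HZ))
    # Assumed head speeds: a slow scan, a casual turn, a quick glance.
    for speed in (30, 100, 300):
        error = angular_error_deg(speed, LAG_S)
        print(f"{speed} deg/s head turn -> view trails by about {error:.0f} degrees")
```

Under these assumptions, a casual head turn leaves the picture many degrees behind where you are actually looking, which is consistent with users learning to move their heads slowly.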

Seeing the representation of your hand suddenly changed your perspective. You now had a perceptual anchor in the virtual world. You felt like you were actually inside the computer because you could see your hand there, and some people reported that it was quite an unnerving experience.

To move about this basic computer world, you simply pointed with one gloved finger in the appropriate direction, and the angle of your thumb controlled the speed of your flight. The computer had been taught to recognize that gesture as representing the desire for movement. Other gestures were possible; for example, closing your fist gripped any object that your hand intersected. As long as you kept your hand closed, the object stayed stuck to it. In fact, this form of interaction is still a big part of VR today, with the grip button of motion controllers doing much the same thing.
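The gesture scheme described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the code NASA or VPL used: the normalized flex values (0.0 for a straight finger, 1.0 for a fully bent one), the two thresholds, the thumb-to-speed mapping and every name below are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds on normalized flex (0.0 straight, 1.0 fully bent).
STRAIGHT = 0.3
BENT = 0.7


@dataclass
class HandPose:
    thumb: float
    index: float
    middle: float
    ring: float
    little: float


def classify(pose: HandPose) -> str:
    """Return 'point', 'fist' or 'none' for a single glove reading."""
    others_bent = all(f >= BENT for f in (pose.middle, pose.ring, pose.little))
    if others_bent and pose.index <= STRAIGHT:
        return "point"   # index extended, remaining fingers curled
    if others_bent and pose.index >= BENT:
        return "fist"    # all four fingers curled
    return "none"


def flight_speed(pose: HandPose, max_speed: float = 1.0) -> float:
    """Speed while pointing. Assumes a straighter thumb means faster flight."""
    if classify(pose) != "point":
        return 0.0
    thumb = min(max(pose.thumb, 0.0), 1.0)
    return max_speed * (1.0 - thumb)


def update_grab(held: Optional[str], pose: HandPose,
                touching: Optional[str]) -> Optional[str]:
    """Return the object held after this frame."""
    if classify(pose) != "fist":
        return None      # opening the hand releases the object
    if held is not None:
        return held      # a closed hand keeps the object stuck to it
    return touching      # grab whatever the hand intersects, if anything
```

Calling `update_grab` once per frame with the previously held object reproduces the "stays stuck while the hand is closed" behaviour: the object is only released on the first frame in which the pose is no longer a fist.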

What is also amazing is that a small microphone attached to the HMD allowed you to give simple voice commands to the computer. Voice input was important because, once you put the HMD on, you could no longer see the keyboard or move the mouse (because you had the big glove on). Most VR headsets today have a built-in microphone too, but there is a general trend to move away from voice commands and use the microphone only to communicate with others.

So they had the technology to make a usable VR headset, and motion controllers were a very real thing, but sound still needed to be more immersive. The good news is that it didn’t take NASA long to fix that issue as well!